The Ethics of Facial Recognition Technology in a World of AI

14 July 2025

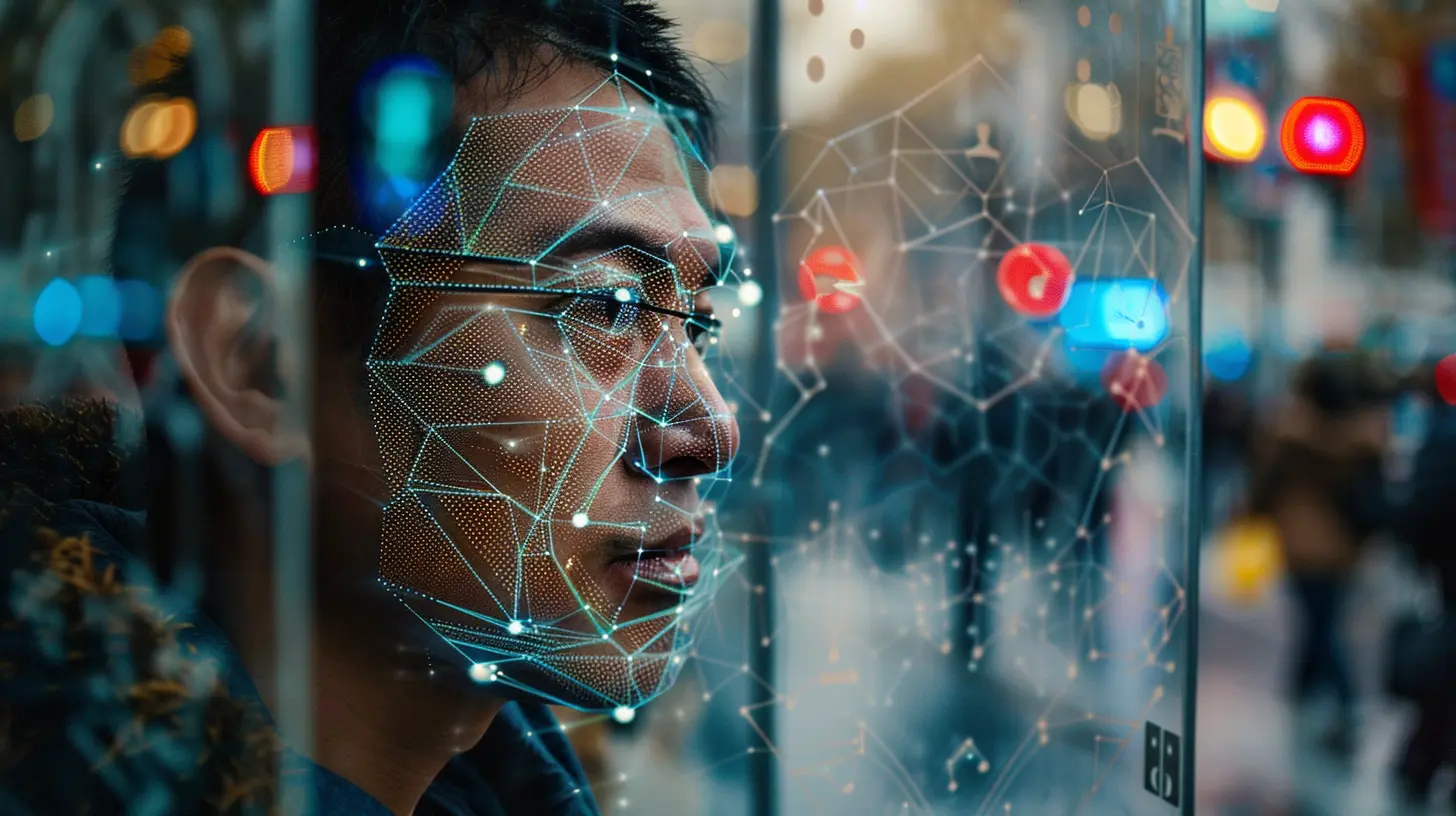

Facial recognition technology used to feel like something straight out of a sci-fi movie, didn’t it? One minute we're watching Tom Cruise in Minority Report, and the next thing we know, our phones are unlocking just by looking at our faces. Kinda cool… and kinda creepy, right?

So, here we are — smack dab in the middle of an AI revolution. Machine learning systems are evolving faster than we can say "algorithm." Facial recognition, specifically, has become one of the most controversial aspects of this technological boom. As convenient as it may seem, it drags along a giant suitcase of ethical concerns.

Let’s break it all down, peel back the digital layers, and talk about what’s really going on behind that smiling profile pic.

What Is Facial Recognition Technology Anyway?

Alright, let’s not assume everyone’s a tech whiz.

Facial recognition technology (FRT) is a type of biometric software that maps a person’s facial features and uses them to identify that person or verify who they claim to be. Think of it as a supercharged digital faceprint. It collects data points like the distance between your eyes, the curve of your jawline, or even how your eyebrow arches. Yes, your eyebrow is now an ID.
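To make the "faceprint" idea a bit more concrete, here's a minimal Python sketch of the verify step. Treat everything in it as an assumption for illustration: the three-number faceprints, the `verify` helper, and the 0.6 threshold are made up, and real systems typically compare learned embeddings with many more dimensions rather than a handful of hand-measured distances.

```python
import math


def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("faceprints must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def verify(enrolled, probe, threshold=0.6):
    """Return True when the probe is close enough to the enrolled faceprint.

    The threshold is a hypothetical value chosen for this toy data only.
    """
    return distance(enrolled, probe) <= threshold


# Hypothetical faceprints: (eye distance, jaw curve, brow arch), arbitrary units.
enrolled = [6.2, 3.1, 1.4]
same_person = [6.3, 3.0, 1.5]
someone_else = [5.1, 3.9, 0.8]

print(verify(enrolled, same_person))
print(verify(enrolled, someone_else))
```

The interesting part is the threshold: set it too loose and strangers get matched, set it too tight and the right person gets locked out. That trade-off is where a lot of the ethical trouble discussed later starts.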

This tech is being used everywhere — unlocking smartphones, tagging friends on social media, airport security, law enforcement, retail stores, and even in some schools. Sounds pretty versatile, right? Almost too versatile.

Why It’s a Big Deal (In A Good Way…)

Let’s give facial recognition some credit where it’s due.

- Convenience: Logging in without a password? Yes, please.

- Security: It adds an extra layer of protection to our devices.

- Crime Reduction: Law enforcement uses it to track down suspects.

- Missing Person Cases: It has even helped locate lost children or missing individuals.

It’s not all doom and gloom. In certain use cases, like finding abducted children or improving airport safety, this tech is doing incredible things.

But — and it’s a big but — with great power comes great responsibility (yes, I just quoted Spider-Man).

When Things Get Sketchy: The Ethical Red Flags

Here’s where the conversation gets uncomfortable. While facial recognition can serve powerful and positive purposes, it can also backfire dramatically when misused or poorly regulated. Let's talk ethics, shall we?

1. Privacy Invasion

Imagine walking through a shopping mall and having every camera analyze your face. Not just to keep you safe but also to track your shopping habits, emotions, and maybe even store your location data. Creepy, right?

Facial recognition often works without explicit consent. Most people don’t even know when it’s being used. That’s a serious violation of personal privacy. When our faces become data points, do we even own them anymore?

2. Inaccuracies & Bias

AI isn't perfect. In fact, facial recognition algorithms have shown alarming error rates, especially when identifying people of color, women, or non-binary individuals. Several studies have found that these systems perform far better on light-skinned males than on any other group.

It’s not just a tech glitch; it’s a human rights issue. If the software misidentifies someone and they're arrested or barred from access to a service, that’s not just inconvenient. It's devastating.
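For the curious, here's roughly what "checking for bias" can look like in practice: measure the error rate separately for each group instead of reporting one overall number. This is only a sketch. The `false_match_rate_by_group` helper, the group labels, and the records are all invented for illustration and don't come from any real system or study.

```python
from collections import defaultdict


def false_match_rate_by_group(records):
    """Compute the false match rate per group.

    records: iterable of (group, is_same_person, system_said_match).
    Only comparisons between different people count toward the rate.
    """
    impostor = defaultdict(int)     # different-person comparisons per group
    false_match = defaultdict(int)  # of those, how many the system matched
    for group, is_same_person, said_match in records:
        if not is_same_person:
            impostor[group] += 1
            if said_match:
                false_match[group] += 1
    return {g: false_match[g] / impostor[g] for g in impostor}


# Made-up toy data, not real measurements.
records = [
    ("group_a", False, False), ("group_a", False, False),
    ("group_a", False, False), ("group_a", False, True),
    ("group_b", False, True), ("group_b", False, True),
    ("group_b", False, False), ("group_b", False, False),
    ("group_a", True, True), ("group_b", True, True),
]

rates = false_match_rate_by_group(records)
for group, rate in sorted(rates.items()):
    print(group, rate)
```

In this toy data one group is wrongly matched twice as often as the other, which an overall average would partly hide. That's why per-group reporting matters.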

3. Surveillance Overreach

Authoritarian governments have already started using facial recognition to monitor and suppress citizens. China’s mass camera surveillance, often mentioned in the same breath as its social credit system, is a commonly cited example of how far tracking, scoring, and judging people can go. Sounds dystopian? That’s because it is.

Could such surveillance tactics creep into democratic societies? Possibly. When governments collect facial data under the guise of “public safety,” it raises a lot of eyebrows about overreach and surveillance abuse.

4. Consent & Transparency

Would you feel comfortable knowing your face is in a database you never signed up for? Didn’t think so.

Many companies and even government bodies don't seek permission before collecting people's facial data. There’s often little to no transparency about how long the data is stored, who has access to it, or how it’s protected. That's a recipe for mistrust.

Who’s Responsible for Regulating This?

That’s one of the biggest ethical questions of all. Who gets to decide how this technology is used? Governments? Tech giants? Independent watchdog groups?

Right now, it's kind of the Wild West.

- In the U.S., there’s no comprehensive federal law governing facial recognition.

- In the EU, the GDPR sets some boundaries, but enforcement varies.

- Some cities like San Francisco and Boston have banned its use by public agencies.

But we still lack global standards. And when technology travels faster than legislation, you end up with systems that are under-regulated, inconsistent, and, frankly, dangerous.

Ethics Aren’t Just Academic

We're not talking about hypothetical scenarios here; the ethical dilemmas around facial recognition are affecting real people every day. Let me throw a few real-world examples your way:

- In 2020, an innocent Black man in Detroit was wrongfully arrested due to a false facial recognition match.

- Clearview AI reportedly scraped billions of images from social media without consent to build its facial recognition tool — sparking legal and moral outrage.

- Some schools and workplaces have implemented facial scanning for attendance or monitoring, triggering serious privacy concerns among students and employees.

This isn’t just an engineering problem. It's a human one.

Can Facial Recognition Be Ethical?

It’s not all hopeless, I promise.

Here’s the twist: Facial recognition can be ethical, if it’s designed and used the right way. But that takes effort, accountability, and most importantly, empathy.

Here are a few things that can help turn this tech around:

1. Opt-In Systems

People should have the choice to participate. Full stop. If a system wants to scan your face, it should ask first. Transparent opt-in methods could go a long way in rebuilding trust.

2. Better Training Data

The biases in facial recognition systems often come from poor-quality or non-diverse training data. If AI learns from biased data, it’ll produce biased results. Companies need to invest in fair, inclusive data sets.

3. Strict Regulation and Audits

We need clear laws outlining who can use this tech, how they can use it, and what happens when they misuse it. Periodic audits can keep these systems accountable.

4. Data Minimization

Only collect what you absolutely need, and nothing more. The same way we don’t need to give our entire life story to download an app, recognition systems shouldn’t require our entire biometric identity for minor conveniences.

The Slippery Slope of “It’s Just for Security”

Ever heard the phrase “if you’re not doing anything wrong, you don’t have anything to worry about”? It sounds convincing, until it’s used to justify constant monitoring.

Security is important, no doubt. But who defines what counts as "suspicious"? And how many rights are we willing to trade for a sense of safety?

This logic often ends up silencing dissent, marginalizing communities, and justifying surveillance creep — the gradual but persistent increase of monitoring in everyday life.

The Role of Tech Companies

Let’s be honest: big tech companies are the gatekeepers here. They’re the ones creating the software and setting the stage for how it gets used.

Companies like Microsoft, Amazon, and Google have all paused or limited their facial recognition programs in some capacity due to ethical concerns. That’s a start. But self-regulation only goes so far.

These companies need to integrate ethics into their development processes. Not just as an afterthought, but right from the beginning. Because by the time the public pushes back, the damage might already be done.

What Can You — the Average Joe — Do About It?

Glad you asked.

You don’t need to be an AI researcher to care about this. As citizens, consumers, and digital participants, we all have a role in shaping how facial recognition impacts our world.

- Stay informed: Knowing how and where this tech is used helps you make better decisions.

- Read the fine print: Before you upload that selfie or sign up for that new platform, check their privacy terms.

- Support regulations: Advocate for ethical legislation that keeps companies and governments in check.

- Ask questions: Don’t be afraid to challenge institutions that use facial recognition. It’s your face, after all.

Wrapping It Up: Faces, Freedom & The Future

The rise of facial recognition technology is a classic case of progress meeting principle. It’s shiny, powerful, and full of possibilities, but without ethical foundations, it can quickly go off the rails.

At the end of the day, this isn’t just about technology. It’s about trust, consent, fairness, and freedom. Our faces are more than just data points. They’re a part of who we are, and we deserve to control how they’re used.

As we move deeper into the age of AI, we’ll need to make some tough decisions. But with awareness, advocacy, and a whole lot of ethical consideration, we can shape a future where technology respects the very people it’s meant to serve.

Because if our faces are going to power the future, they’d better be treated with the dignity they deserve.

All images in this post were generated using AI tools.

Category: AI Ethics

Author: Marcus Gray

Discussion

1 comment

Nixie McFadden

Facial recognition technology raises significant ethical concerns, including privacy violations, bias, and surveillance. As AI advances, establishing clear regulations and ethical guidelines is crucial to ensure responsible use and protect individual rights.

July 28, 2025 at 4:13 AM

Marcus Gray

Thank you for highlighting these important concerns. I agree that establishing clear regulations and ethical guidelines is essential as we navigate the complexities of facial recognition technology in the evolving landscape of AI.